4.1 From Reasoning to Specialized Knowledge

The collapse of grand symbolic AI ambitions forced a strategic retreat to more defensible territory: capturing human expertise in narrow, well-defined domains. This shift from general reasoning to specialized knowledge proved commercially successful and scientifically instructive, revealing both the potential and the fundamental limitations of hand-coded intelligence.

Medical Expertise: MYCIN's Breakthrough and Boundaries

Stanford's MYCIN system, developed between 1972 and 1980 under Edward Shortliffe's leadership, represented this new approach's most celebrated success [Core Claim: Shortliffe, Computer-Based Medical Consultations: MYCIN, 1976]. MYCIN diagnosed bacterial infections and recommended antibiotic treatments by encoding the knowledge of infectious disease specialists into approximately 600 if-then rules.

In controlled trials, MYCIN's diagnostic accuracy reached 65%, comparable to non-specialist physicians and occasionally exceeding expert performance for specific infection types [Core Claim: Yu et al., Journal of Medical Systems, 1979]. Because the system reasoned by backward chaining through its rule base, it could explain how it reached each conclusion, which impressed medical professionals who could follow and critique its logic.
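The backward chaining and explanation behavior described above can be illustrated with a minimal sketch. The rule names, findings, and conclusions below are hypothetical placeholders, not MYCIN's actual rules, and the sketch leaves out certainty factors and assumes the rule set contains no cycles.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Rule:
    name: str
    premises: Tuple[str, ...]
    conclusion: str


# Hypothetical toy rules for illustration only; these are NOT MYCIN's rules.
RULES = [
    Rule("R1", ("gram_negative", "rod_shaped"), "enterobacteriaceae"),
    Rule("R2", ("enterobacteriaceae", "urinary_site"), "suspect_e_coli"),
]


def prove(goal, facts, rules, trace, depth=0):
    """Work backward from `goal` to known findings, recording each step."""
    indent = "  " * depth
    if goal in facts:
        trace.append(f"{indent}{goal}: given as a finding")
        return True
    for rule in rules:
        if rule.conclusion != goal:
            continue
        # Collect the sub-proof separately so failed attempts leave no trace.
        sub_trace = []
        if all(prove(p, facts, rules, sub_trace, depth + 1) for p in rule.premises):
            trace.append(f"{indent}{goal}: concluded by {rule.name}")
            trace.extend(sub_trace)
            return True
    trace.append(f"{indent}{goal}: could not be established")
    return False


if __name__ == "__main__":
    findings = {"gram_negative", "rod_shaped", "urinary_site"}
    explanation = []
    prove("suspect_e_coli", findings, RULES, explanation)
    print("\n".join(explanation))
```

The recorded trace is what makes the reasoning inspectable: each conclusion is tied to the rule that produced it and to the findings that satisfied that rule's premises.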

Yet MYCIN's apparent success masked significant limitations. The system operated only within the narrow domain of bacterial infections, possessed no learning capability, and required extensive knowledge engineering to encode each new piece of medical knowledge. When presented with cases outside its expertise—viral infections, drug interactions, or patients with multiple conditions—MYCIN's performance deteriorated rapidly [Context Claim: Clancey & Shortliffe, Readings in Medical AI, 1984].

Chemistry and Beyond: DENDRAL's Scientific Impact

DENDRAL, developed at Stanford from 1965 through the early 1980s, demonstrated expert system applicability beyond medicine [Core Claim: Lindsay et al., Applications of AI for Organic Chemistry, 1980]. This system determined molecular structures from mass spectrometry data by combining general principles of chemistry with specific heuristics derived from expert chemists.
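The combination of general principles with pruning heuristics can be sketched as a generate-and-test loop. This is a deliberately small stand-in, not DENDRAL's method: it enumerates molecular formulas rather than structures, considers only carbon, hydrogen, and oxygen, uses nominal integer masses, and applies a single textbook valence rule as its only heuristic. All function names are hypothetical.

```python
from itertools import product


def candidate_formulas(target_mass, max_atoms=12):
    """Generate every (C, H, O) atom count whose nominal mass equals the target."""
    for c, o in product(range(1, max_atoms + 1), range(0, max_atoms + 1)):
        # Nominal masses: C = 12, O = 16, H = 1; hydrogen fills the remainder.
        h = target_mass - 12 * c - 16 * o
        if h >= 0:
            yield (c, h, o)


def chemically_plausible(formula):
    """Valence check: a C/H/O molecule has an even hydrogen count of at most 2C + 2."""
    c, h, _o = formula
    return h % 2 == 0 and h <= 2 * c + 2


def plausible_formulas(target_mass):
    """Generate all candidates, then keep only those that pass the chemistry test."""
    return [f for f in candidate_formulas(target_mass) if chemically_plausible(f)]


if __name__ == "__main__":
    print(plausible_formulas(46))
```

For a nominal mass of 46, the generator proposes six formulas and the valence rule keeps two, CH2O2 and C2H6O. The point of the sketch is the division of labor: exhaustive generation from general principles, followed by expert-style constraints that discard implausible candidates.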

DENDRAL achieved publication-quality results, with its analyses appearing in peer-reviewed chemistry journals. The system's ability to generate novel hypotheses about molecular structures impressed researchers and attracted significant industrial interest. However, like MYCIN, DENDRAL required extensive manual knowledge engineering and couldn't adapt to new types of chemical compounds without substantial reprogramming.

The Knowledge Acquisition Bottleneck

Both systems revealed what became known as the knowledge acquisition bottleneck: the expensive, time-consuming process of extracting expertise from human specialists and encoding it in computational form [Context Claim: Feigenbaum, Information Processing & Management, 1982]. Knowledge engineers spent months interviewing experts, observing their decision-making processes, and translating intuitive judgments into explicit rules.

This bottleneck proved more than just an engineering challenge—it reflected fundamental questions about the nature of expertise itself. Human experts often relied on intuitive pattern recognition that they couldn't articulate explicitly. When asked how they diagnosed certain conditions or recognized particular molecular structures, experts frequently responded with phrases like "I just know" or "It looks familiar," offering little guidance for rule-based systems.

4.2 Global Ambitions and Cultural Approaches

Japan's Fifth Generation: The Logic Programming Gambit

Japan's Fifth Generation Computer Systems project (1982-1992) represented the era's most ambitious attempt to industrialize AI research [Core Claim: ICOT, Fifth Generation Computer Systems, 1981]. With approximately $850 million in government funding, Japan aimed to create massively parallel computers optimized for logic programming and automated reasoning.

The project chose Prolog as its foundational programming language, betting that logic programming would prove superior to conventional approaches for knowledge representation and reasoning. Prolog's declarative nature—where programmers specified what they wanted to achieve rather than how to achieve it—promised to make AI development more accessible and robust.

Japanese researchers developed specialized logic programming hardware, including ICOT's Personal Sequential Inference (PSI) workstations and, later, parallel inference machines intended to execute millions of logical inferences per second [Core Claim: Uchida, Fifth Generation Conference Proceedings, 1984]. These machines demonstrated impressive performance on certain benchmark problems and influenced computer architecture research worldwide.

European Logic Programming Initiatives

European research institutions pursued complementary approaches to logic-based AI, often emphasizing theoretical foundations over commercial applications. The European Strategic Programme on Research in Information Technology (ESPRIT) funded numerous Prolog-based projects throughout the 1980s [Context Claim: Kahn, ESPRIT: The First Phase, 1987].

French researchers developed Prolog-II, which extended basic Prolog with constraint handling and meta-programming capabilities. British universities explored applications of logic programming to natural language processing and automated theorem proving. These efforts created a rich ecosystem of logic programming tools and techniques that influenced AI development globally.

Alternative Cultural Approaches

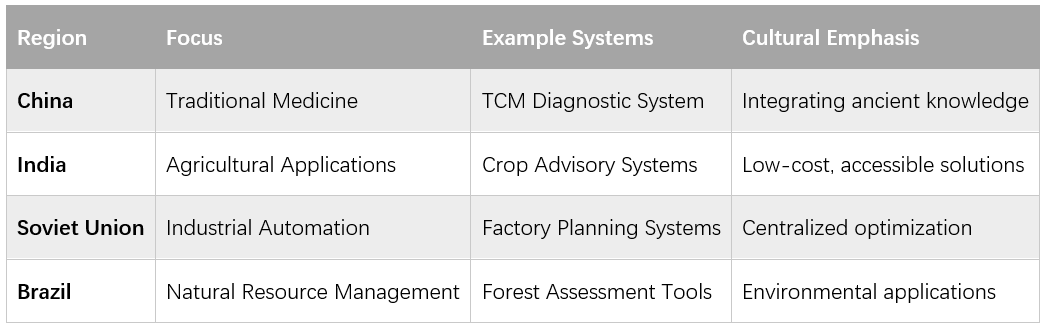

Beyond Japan and Europe, other regions developed expert systems reflecting local priorities and constraints:

These diverse approaches revealed how cultural values and economic priorities shaped AI development. Chinese systems attempted to formalize traditional medical knowledge, while Indian projects emphasized affordable solutions for agricultural communities. Soviet systems reflected centralized planning philosophies, whereas Brazilian efforts focused on environmental monitoring and resource management [Interpretive Claim].

4.3 Commercial Reality and Technical Limits

The 1980s Expert Systems Boom

By the mid-1980s, expert systems had achieved remarkable commercial penetration. Industry surveys indicated that over 60% of Fortune 500 companies had deployed or were developing expert systems for various applications [Core Claim: Business Week, "Smart Machines," October 1985]. Companies invested hundreds of millions of dollars in AI technology, driven by promises of automated expertise and competitive advantage.

Digital Equipment Corporation's R1/XCON system exemplified both the potential and problems of commercial expert systems [Core Claim: McDermott, AI Magazine, 1982]. R1 configured computer systems by selecting appropriate components and determining their physical layout—a task requiring extensive technical knowledge and careful attention to compatibility constraints.
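A configuration task of this kind lends itself to forward chaining, in which rules fire on what is already in the order and add whatever is missing. The sketch below assumes that style of rule engine; the component names and rules are hypothetical and far simpler than anything in DEC's actual rule base.

```python
# Each rule is (name, components that must be present, components to add).
# Hypothetical rules for illustration; not taken from R1/XCON.
RULES = [
    ("add-disk-controller", frozenset({"disk_drive"}), frozenset({"disk_controller"})),
    ("add-backplane-slot", frozenset({"disk_controller"}), frozenset({"backplane_slot"})),
    ("add-power-supply", frozenset({"backplane_slot"}), frozenset({"power_supply"})),
]


def forward_chain(order, rules):
    """Fire rules repeatedly until a full pass adds nothing new (a fixed point)."""
    config = set(order)
    fired = []
    changed = True
    while changed:
        changed = False
        for name, needs, adds in rules:
            # Fire only if the preconditions hold and the rule contributes something.
            if needs <= config and not adds <= config:
                config |= adds
                fired.append(name)
                changed = True
    return config, fired


if __name__ == "__main__":
    final_config, fired_rules = forward_chain({"cpu", "disk_drive"}, RULES)
    print(sorted(final_config))
    print(fired_rules)
```

Starting from an order containing only a CPU and a disk drive, the rules chain: the drive requires a controller, the controller requires a slot, and the slot requires a power supply. Each rule is trivial in isolation, and the completed configuration emerges from their interaction.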

At its peak, R1 contained over 10,000 rules and processed thousands of configuration requests monthly, achieving accuracy rates exceeding 95% for standard configurations. DEC estimated that R1 saved the company $25-40 million annually by automating tasks that previously required human experts and reducing configuration errors that led to expensive recalls [Core Claim: Barker & O'Connor, AI Magazine, 1989].

The Maintenance Crisis

R1's apparent success masked growing maintenance problems that would eventually doom the expert systems approach. As DEC's product line evolved, the system's rule base required constant updates to accommodate new components, changed specifications, and modified compatibility requirements. Knowledge engineers spent increasing amounts of time debugging rule interactions, resolving conflicts, and maintaining system coherence.

The problem worsened as R1 grew larger. Adding new rules often created unexpected interactions with existing rules, leading to contradictory conclusions or infinite loops. The system's knowledge base became so complex that only a small team of specialists could modify it safely, creating a bottleneck that limited the system's adaptability [Context Claim: Bachant & McDermott, IEEE Expert, 1984].
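The kind of unexpected interaction described here can be made concrete with a small conflict check. This is an illustrative sketch, not a tool DEC is known to have used: rules are reduced to a set of required conditions and a single conclusion, and two rules are flagged when one's conditions include the other's (so both fire together) while their conclusions are declared mutually exclusive. All rule and component names are hypothetical.

```python
from itertools import combinations


def find_conflicts(rules, mutually_exclusive):
    """Return pairs of rules that fire together yet reach incompatible conclusions."""
    conflicts = []
    for (name_a, cond_a, concl_a), (name_b, cond_b, concl_b) in combinations(rules, 2):
        # If one condition set contains the other, the more specific rule
        # never fires without the more general one firing too.
        fire_together = cond_a <= cond_b or cond_b <= cond_a
        if fire_together and frozenset({concl_a, concl_b}) in mutually_exclusive:
            conflicts.append((name_a, name_b))
    return conflicts


# Hypothetical rule base: (name, required conditions, conclusion).
RULES = [
    ("old-cabinet", frozenset({"large_system"}), "cabinet_A"),
    ("new-cabinet", frozenset({"large_system", "new_disk"}), "cabinet_B"),
    ("small-cabinet", frozenset({"small_system"}), "cabinet_A"),
]
EXCLUSIVE = {frozenset({"cabinet_A", "cabinet_B"})}


if __name__ == "__main__":
    print(find_conflicts(RULES, EXCLUSIVE))
```

Here the rule added for a new disk model silently contradicts an older rule that still matches the same orders. Even this naive pairwise check scales quadratically: a rule base of 10,000 rules has roughly 50 million pairs to examine, which hints at why safe modification became the preserve of a few specialists.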

Similar maintenance crises affected expert systems throughout the industry. American Express's credit authorization system required extensive updates when fraud patterns evolved. DuPont's chemical process advisory systems became obsolete when manufacturing procedures changed. These experiences revealed a fundamental limitation: static knowledge representation couldn't adapt to dynamic environments [Interpretive Claim].

The Brittleness Problem

Expert systems exhibited extreme brittleness: strong performance within their designed domains but abrupt failure when encountering anything outside their programmed knowledge. A medical diagnosis system that excelled at bacterial infections might produce nonsensical recommendations when asked about viral diseases. A financial analysis system tuned for stable markets could fail completely during economic volatility.

This brittleness stemmed from expert systems' reliance on explicit, hand-coded knowledge. Unlike human experts, who could recognize novel situations and adapt their reasoning accordingly, expert systems could only apply their programmed rules mechanically. When faced with situations their designers had not anticipated, these systems had no mechanism for degrading gracefully or for recognizing that a case lay outside their competence.

4.4 Alternative Approaches: Brooks' Embodied Challenge

While most AI researchers focused on knowledge representation and reasoning, Rodney Brooks at MIT pursued a radically different approach that challenged the fundamental assumptions of symbolic AI [Core Claim: Brooks, AI Journal, 1991]. His subsumption architecture demonstrated that intelligent behavior could emerge from interactions between simple behavioral modules, without centralized reasoning or explicit knowledge representation.

Behavior-Based Robotics

Brooks' robots, including the six-legged Genghis walker, navigated complex terrain using layered behavioral systems that responded directly to sensory inputs [Core Claim: Brooks, Science, 1989]. Lower layers handled basic functions like obstacle avoidance, while higher layers managed goal-directed movement. Crucially, these layers operated independently, with higher layers subsuming lower ones only when necessary.
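The layering described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Brooks' implementation: the sensor fields, command strings, and function names are hypothetical, and the suppression wiring of a real subsumption network is reduced to a priority-ordered list in which a higher layer either issues a command or stays silent.

```python
def avoid_obstacle(sensors):
    """Lowest layer: always produces a command, reacting directly to obstacles."""
    return "turn_left" if sensors["obstacle_ahead"] else "forward"


def seek_goal(sensors):
    """Higher layer: overrides the layer below only when it has something to add."""
    if sensors["obstacle_ahead"] or sensors["goal_bearing"] == "ahead":
        return None  # stay silent and let the lower layer act
    return "turn_" + sensors["goal_bearing"]


# Highest-priority layer first; the last layer must always return a command.
LAYERS = [seek_goal, avoid_obstacle]


def act(sensors, layers=LAYERS):
    """Return the command of the highest layer that chooses to speak."""
    for layer in layers:
        command = layer(sensors)
        if command is not None:
            return command
    raise RuntimeError("lowest layer must always produce a command")


if __name__ == "__main__":
    print(act({"obstacle_ahead": True, "goal_bearing": "right"}))
    print(act({"obstacle_ahead": False, "goal_bearing": "right"}))
```

Neither layer consults a map or a plan, and neither knows the other exists. Each maps current sensor readings straight to a motor command, and removing the goal-seeking layer still leaves a robot that avoids obstacles.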

This approach achieved remarkable results: Genghis could traverse rocky terrain, climb over obstacles, and recover from falls without any explicit representation of its environment or centralized planning system. The robot's behavior appeared intelligent and adaptive, yet emerged from relatively simple computational mechanisms.

Challenging Symbolic Orthodoxy

Brooks' work challenged several core assumptions of symbolic AI:

- Knowledge Representation: Intelligence could emerge without explicit world models

- Central Planning: Distributed behavioral modules could coordinate without central control

- Symbol Grounding: Direct sensor-motor connections eliminated the need for abstract symbolic reasoning

- Embodiment: Physical interaction with the environment provided crucial constraints and information

These challenges resonated with researchers who had grown frustrated with expert systems' limitations. Brooks' approach suggested that intelligence might require physical embodiment and environmental interaction rather than abstract reasoning about symbolic representations [Interpretive Claim].

4.5 Second Winter and Transition

The Commercial Collapse

By 1987, expert systems investments had begun declining sharply, with some estimates suggesting a 50% reduction in AI-related venture capital funding [Context Claim: AI Magazine Market Survey, 1988]. The causes were multiple and reinforcing: maintenance costs that exceeded development costs, brittleness that limited practical applications, and hardware requirements that proved economically unsustainable.

The specialized LISP machines that powered many expert systems became commercially unviable as general-purpose computers grew more powerful and less expensive. Companies that had invested heavily in AI hardware found themselves locked into proprietary platforms that couldn't compete with commodity alternatives.

Learning from Failure

The expert systems collapse provided crucial lessons that shaped subsequent AI development. The recognition that hand-coded knowledge couldn't scale or adapt motivated renewed interest in machine learning approaches. MYCIN's certainty factors evolved into more sophisticated probabilistic reasoning methods. Japan's investments in parallel computing, while failing to achieve their AI goals, contributed to advances in computer architecture that would prove crucial for later developments.

Most importantly, the expert systems era demonstrated that intelligence required more than just knowledge—it needed the ability to learn, adapt, and generalize from experience. This insight would drive the statistical revolution that emerged in the following decade, as researchers embraced uncertainty and learning over rigid logical reasoning [Interpretive Claim].

The collapse of expert systems marked not just the end of an AI era, but the beginning of a fundamental shift toward learning-based approaches that would eventually enable the neural network renaissance of the 21st century.
